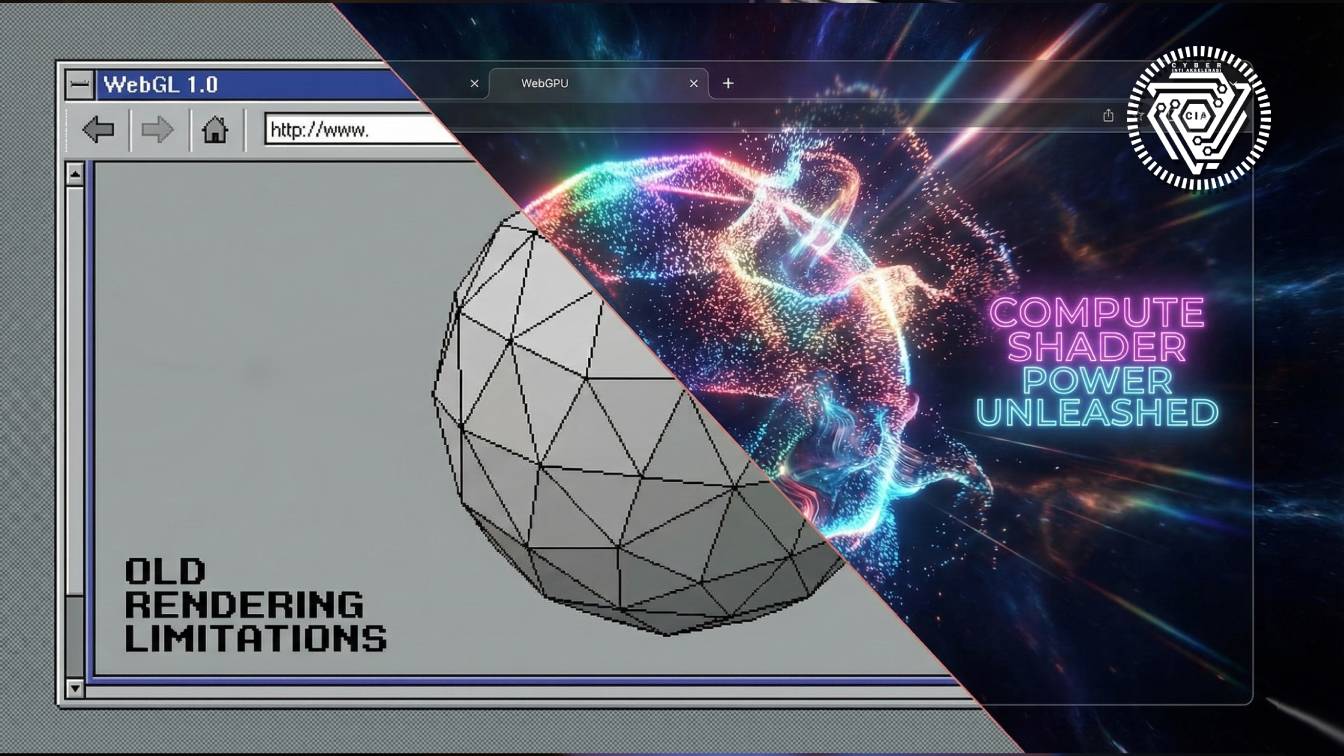

For a decade, 3D graphics on the web were limited by WebGL, an aging API based on OpenGL ES that was not designed for modern GPUs. 2026 is the year in which the WebGPU standard finally matures and is universally adopted. The difference isn't just a boost in FPS (frames per second), but a fundamental architectural shift. WebGPU gives low-level access to graphics hardware, similar to DirectX 12 or Vulkan, cutting the driver overhead that has long been choking browser performance.

Compute Shaders: The Real Power

The most revolutionary feature of WebGPU is compute shaders. WebGL focused only on drawing triangles (graphics). WebGPU lets us use the GPU for general-purpose, massively parallel mathematical computation (GPGPU). The implication? We can run complex physics simulations (fluids, cloth, particles) or even AI model inference (like Stable Diffusion) directly in a browser tab, without backend servers. The browser turns into a mini OS.
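To make the idea concrete, here is a minimal sketch of a particle update (`position += velocity * dt`) run as a WebGPU compute shader. The names `stepParticlesGpu`, `stepParticlesCpu`, `workgroupCount` and `WORKGROUP_SIZE`, as well as the flat `Float32Array` layout, are assumptions made for illustration, not part of any particular engine. The WebGPU objects are typed as `any` so the sketch compiles without the `@webgpu/types` package, and the function returns `null` where WebGPU is unavailable.

```typescript
// Illustrative sketch only: helper names and buffer layout are assumptions.
const WORKGROUP_SIZE = 64;

// WGSL compute shader: one invocation per array element.
const shaderSource = `
@group(0) @binding(0) var<storage, read_write> positions: array<f32>;
@group(0) @binding(1) var<storage, read> velocities: array<f32>;
@group(0) @binding(2) var<uniform> dt: f32;

@compute @workgroup_size(${WORKGROUP_SIZE})
fn main(@builtin(global_invocation_id) id: vec3<u32>) {
  let i = id.x;
  if (i >= arrayLength(&positions)) { return; } // guard the last, partial workgroup
  positions[i] = positions[i] + velocities[i] * dt;
}
`;

// Number of workgroups needed to cover n elements.
function workgroupCount(n: number, size: number = WORKGROUP_SIZE): number {
  return Math.ceil(n / size);
}

// CPU reference with the same arithmetic, useful for checking GPU results.
function stepParticlesCpu(pos: Float32Array, vel: Float32Array, dt: number): Float32Array {
  const out = new Float32Array(pos.length);
  for (let i = 0; i < pos.length; i++) out[i] = pos[i] + vel[i] * dt;
  return out;
}

// GPU version. Assumes non-empty inputs of equal length; very large arrays
// would need a 2D dispatch to stay within the per-dimension workgroup limit.
async function stepParticlesGpu(
  pos: Float32Array, vel: Float32Array, dt: number,
): Promise<Float32Array | null> {
  const g = globalThis as any; // untyped access, so no @webgpu/types needed
  const adapter = await g.navigator?.gpu?.requestAdapter();
  if (!adapter) return null; // WebGPU not available in this environment
  const device = await adapter.requestDevice();
  const { GPUBufferUsage, GPUMapMode } = g;

  const posBuf = device.createBuffer({
    size: pos.byteLength,
    usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_SRC | GPUBufferUsage.COPY_DST,
  });
  const velBuf = device.createBuffer({
    size: vel.byteLength,
    usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST,
  });
  const dtBuf = device.createBuffer({
    size: 4,
    usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST,
  });
  const readBuf = device.createBuffer({
    size: pos.byteLength,
    usage: GPUBufferUsage.MAP_READ | GPUBufferUsage.COPY_DST,
  });
  device.queue.writeBuffer(posBuf, 0, pos);
  device.queue.writeBuffer(velBuf, 0, vel);
  device.queue.writeBuffer(dtBuf, 0, new Float32Array([dt]));

  const pipeline = device.createComputePipeline({
    layout: "auto",
    compute: { module: device.createShaderModule({ code: shaderSource }), entryPoint: "main" },
  });
  const bindGroup = device.createBindGroup({
    layout: pipeline.getBindGroupLayout(0),
    entries: [
      { binding: 0, resource: { buffer: posBuf } },
      { binding: 1, resource: { buffer: velBuf } },
      { binding: 2, resource: { buffer: dtBuf } },
    ],
  });

  // Record the compute pass, then copy the result into a mappable buffer.
  const encoder = device.createCommandEncoder();
  const pass = encoder.beginComputePass();
  pass.setPipeline(pipeline);
  pass.setBindGroup(0, bindGroup);
  pass.dispatchWorkgroups(workgroupCount(pos.length));
  pass.end();
  encoder.copyBufferToBuffer(posBuf, 0, readBuf, 0, pos.byteLength);
  device.queue.submit([encoder.finish()]);

  await readBuf.mapAsync(GPUMapMode.READ);
  const result = new Float32Array(readBuf.getMappedRange().slice(0)); // copy before unmap
  readBuf.unmap();
  for (const b of [posBuf, velBuf, dtBuf, readBuf]) b.destroy(); // free GPU memory explicitly
  return result;
}
```

In a WebGPU-capable browser, `await stepParticlesGpu(pos, vel, 1 / 60)` should match `stepParticlesCpu(pos, vel, 1 / 60)` up to floating-point rounding. A real simulation would keep the buffers on the GPU between frames rather than reading them back each step.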

Impact on Web Development

For developers, this means the death of heavy plugins. CAD apps, professional video editing, and AAA games can now run natively on the web with performance approaching that of desktop apps. At CybermaXia, we are migrating client 3D data visualizations from WebGL-based Three.js to new WebGPU-based engines. The result? Visualizations of millions of data points that used to lag now run smoothly at 60 fps. This is the biggest leap in the history of the interactive web.

However, the challenge is the learning curve. WebGPU code is much more verbose and complex than WebGL code, because buffers, pipelines, and bind groups all have to be created and managed explicitly. This demands stricter engineering discipline in graphics memory management to avoid browser crashes.
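One way to picture that discipline is a small wrapper that records every buffer it creates, enforces a byte budget, and frees buffers explicitly with `destroy()` instead of waiting for garbage collection. This is a hedged sketch, not an established pattern from any library: `BufferTracker`, `budgetBytes`, and the `DeviceLike`/`BufferLike` interfaces are hypothetical names, written structurally so that a real `GPUDevice` (whose `createBuffer` and `GPUBuffer.destroy` methods do exist) can be passed in.

```typescript
// Minimal structural types so the sketch compiles without @webgpu/types.
// A real GPUDevice / GPUBuffer satisfies these shapes.
interface BufferLike { destroy(): void }
interface DeviceLike { createBuffer(desc: { size: number; usage: number }): BufferLike }

// Hypothetical helper: tracks live GPU buffers against a self-imposed budget.
class BufferTracker {
  private device: DeviceLike;
  private budgetBytes: number;
  private live = new Map<BufferLike, number>(); // buffer -> size in bytes
  private bytes = 0;

  constructor(device: DeviceLike, budgetBytes: number) {
    this.device = device;
    this.budgetBytes = budgetBytes;
  }

  // Create a buffer, refusing allocations that would exceed the budget.
  allocate(size: number, usage: number): BufferLike {
    if (this.bytes + size > this.budgetBytes) {
      throw new Error(`GPU buffer budget exceeded: ${this.bytes + size} > ${this.budgetBytes}`);
    }
    const buffer = this.device.createBuffer({ size, usage });
    this.live.set(buffer, size);
    this.bytes += size;
    return buffer;
  }

  // Free a tracked buffer's GPU memory immediately; unknown buffers are ignored.
  release(buffer: BufferLike): void {
    const size = this.live.get(buffer);
    if (size === undefined) return;
    buffer.destroy();
    this.live.delete(buffer);
    this.bytes -= size;
  }

  // Free everything, e.g. when a visualization is torn down.
  releaseAll(): void {
    for (const buffer of [...this.live.keys()]) this.release(buffer);
  }

  get liveBytes(): number {
    return this.bytes;
  }
}
```

With a real device this might be used as `const tracker = new BufferTracker(device, 256 * 1024 * 1024)`, calling `tracker.releaseAll()` when a scene is disposed. The budget value here is arbitrary; the point is that allocation and release are explicit and accounted for.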